Authors:

(1) Omri Avrahami, Google Research and The Hebrew University of Jerusalem;

(2) Amir Hertz, Google Research;

(3) Yael Vinker, Google Research and Tel Aviv University;

(4) Moab Arar, Google Research and Tel Aviv University;

(5) Shlomi Fruchter, Google Research;

(6) Ohad Fried, Reichman University;

(7) Daniel Cohen-Or, Google Research and Tel Aviv University;

(8) Dani Lischinski, Google Research and The Hebrew University of Jerusalem.

Table of Links

- Abstract and Introduction

- Related Work

- Method

- Experiments

- Limitations and Conclusions

- A. Additional Experiments

- B. Implementation Details

- C. Societal Impact

- References

5. Limitations and Conclusions

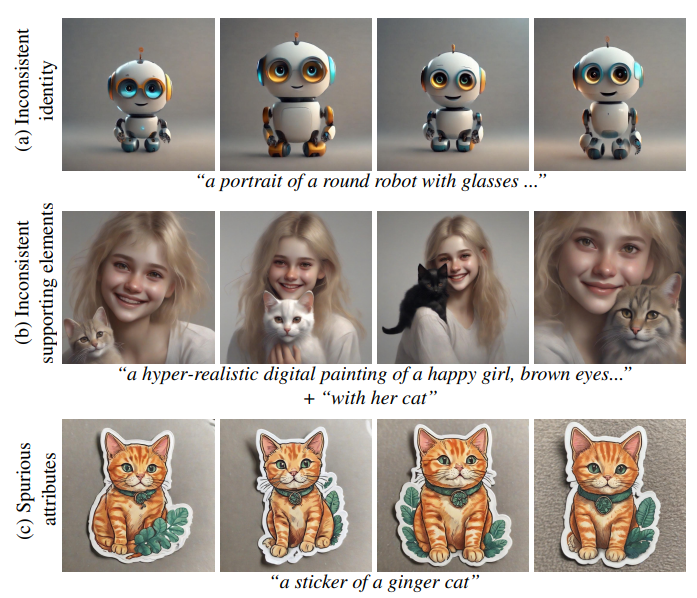

We found our method to suffer from the following limitations: (a) Inconsistent identity — in some cases, our method is not able to converge to a fully consistent identity (without overfitting). As demonstrated in Figure 8(a), when trying to generate a portrait of a robot, our method generated robots with slightly different colors and shapes (e.g., different arms). This may result from a prompt that is too general, for which identity clustering (Section 3.1) is not able to find a sufficiently cohesive set. (b) Inconsistent supporting characters/elements— although our method is able to find a consistent identity for the character described by the input prompt, the identities of other characters, related to the input character (e.g., their pet), might be inconsistent. For example, in Figure 8(b) the input prompt p to our method described only the girl, and when asked to generate the girl with her cat, different cats were generated. In addition, our framework does not support finding multiple concepts concurrently [6]. (c) Spurious attributes — we found that in some cases, our method binds additional attributes, which are not part of the input text prompt, with the final identity of the character. For example, in Figure 8(c), the input text prompt was “a sticker of a ginger cat”, however, our method added green leaves to the generated sticker, even though it was not asked to do so. This stems from the stochastic nature of the text-to-image model — the model added these leaves in some of the stickers generated during the identity clustering stage (Section 3.1), and the stickers containing the leaves happened to form the most cohesive set ccohesive. (d) Significant computational cost — each iteration of our method involves generating a large number of images, and learning the identity of the most cohesive cluster. It takes about 20 minutes to converge into a consistent identity. Reducing the computational costs is an appealing direction for further research.

In conclusion, in this paper we offered the first fully-automated solution to the problem of consistent character generation. We hope that our work will pave the way for future advancements, as we believe this technology of consistent character generation may have a disruptive effect on numerous sectors, including education, storytelling, entertainment, fashion, brand design, advertising, and more.

Acknowledgments. We thank Yael Pitch, Matan Cohen, Neal Wadhwa and Yaron Brodsky for their valuable help and feedback.

This paper is available on arxiv under CC BY-NC-ND 4.0 DEED license.