Authors:

(1) Sebastian Dziadzio, University of Tübingen;

(2) Çagatay Yıldız, University of Tübingen;

(3) Gido M. van de Ven, KU Leuven;

(4) Tomasz Trzcinski, IDEAS NCBR, Warsaw University of Technology, Tooploox;

(5) Tinne Tuytelaars, KU Leuven;

(6) Matthias Bethge, University of Tübingen.

3.2. Disentangled learning

With our procedural benchmark generator, we can test continual learning methods over time frames an order of magnitude longer than those covered by existing datasets. As previously mentioned, we hypothesize that to learn efficiently over such time horizons, we need to clearly distinguish between the generalization mechanism that needs to be learned and the class-specific information that has to be memorized. We start by observing that human learning is likely characterized by such a separation. Take face recognition, for example. A child is able to memorize the face of its parent but can still get confused by an unexpected transformation, as evidenced by countless online videos of babies failing to recognize their fathers after a shave. Once we learn the typical identity-preserving transformations that a face can undergo, we need only to memorize the particular features of any new face to instantly generalize over many transformations, such as facial expression, lighting, three-dimensional rotation, scale, perspective projection, or a change of hairstyle. Note that while we encounter new faces every day, these transformations remain consistent and affect every face similarly.

Inspired by this observation, we aim to disentangle generalization from memorization by explicitly separating the learning module from the memory buffer in our model design. The memory buffer stores a single exemplar image of each encountered shape. We assume these are given to us by an oracle throughout training, but it would be possible to bootstrap the buffer with a few initial exemplars. The equivariance learning module is a neural network designed to learn the general transformations present in the data.

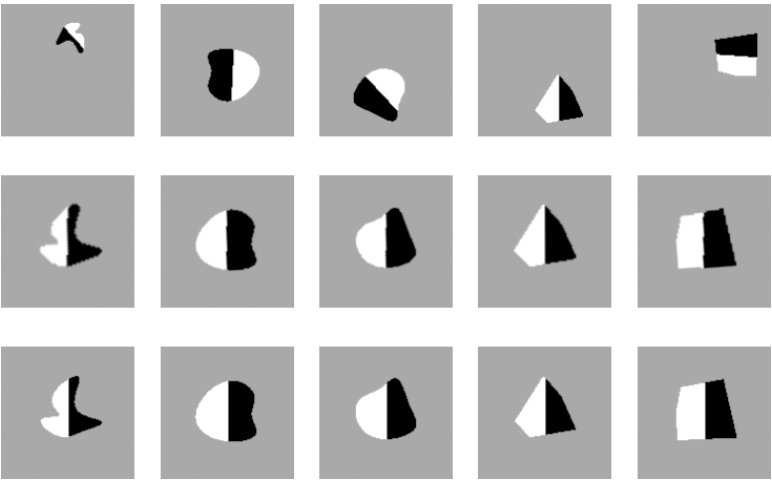

Discussion. The disentangled learning approach has a number of advantages. First, by learning transformations instead of class boundaries, we reformulate a challenging class-incremental classification scenario as a domain-incremental FoV regression problem [37]. Since the transformations affect every class in the same way, they are easier to learn in a continual setting. We show that this approach is not only less prone to forgetting but also exhibits significant forward and backward transfer. In other words, the knowledge about regressing FoVs is efficiently accumulated over time. Second, the exemplar buffer is a fully explainable representation of memory that can be explicitly edited: we can easily add a new class by inserting its exemplar or completely erase a class from memory by removing its exemplar. Finally, we show experimentally that our method generalizes instantly to new shapes with just a single exemplar and works reliably in an open-set classification scenario. Figure 3 illustrates the stages of the classification mechanism: five input images, their corresponding normalization network outputs, and closest exemplars from the buffer.
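The editable exemplar buffer and the closest-exemplar lookup described above can be illustrated with a minimal sketch. It is not the implementation used in the paper: the class `ExemplarBuffer`, the placeholder `normalize` function (an identity map standing in for the trained normalization network), the mean-squared-error distance, and the `reject_above` threshold for open-set rejection are all assumptions made for illustration.

```python
import numpy as np


class ExemplarBuffer:
    """Hypothetical memory buffer storing one exemplar image per class."""

    def __init__(self):
        self._exemplars = {}  # class label -> exemplar image (2D float array)

    def add(self, label, image):
        # Adding a class only requires storing its single exemplar.
        self._exemplars[label] = np.asarray(image, dtype=np.float64)

    def remove(self, label):
        # Erasing a class from memory amounts to deleting its exemplar.
        del self._exemplars[label]

    def labels(self):
        return sorted(self._exemplars)

    def classify(self, normalized_image, reject_above=None):
        """Return the label of the closest exemplar.

        Distance is mean squared error (an assumption). If `reject_above`
        is set and even the best match is farther than that threshold,
        return None to signal an unknown class (assumed open-set rule).
        """
        if not self._exemplars:
            raise ValueError("the buffer is empty")
        query = np.asarray(normalized_image, dtype=np.float64)
        distances = {
            label: float(np.mean((query - exemplar) ** 2))
            for label, exemplar in self._exemplars.items()
        }
        best = min(distances, key=distances.get)
        if reject_above is not None and distances[best] > reject_above:
            return None
        return best


def normalize(image):
    """Placeholder for the trained normalization network.

    Assumption: the real network maps a transformed input to its canonical
    form; here the identity is used so that the sketch stays runnable.
    """
    return image


# Two toy 8x8 shapes serve as exemplars.
square = np.zeros((8, 8))
square[2:6, 2:6] = 1.0
cross = np.zeros((8, 8))
cross[3:5, :] = 1.0
cross[:, 3:5] = 1.0

buffer = ExemplarBuffer()
buffer.add("square", square)
buffer.add("cross", cross)

# A slightly noisy square is matched to the square exemplar.
rng = np.random.default_rng(0)
query = square + 0.05 * rng.standard_normal(square.shape)
predicted = buffer.classify(normalize(query))

# A blank image is far from every exemplar and is rejected as unknown.
unknown = buffer.classify(normalize(np.zeros((8, 8))), reject_above=0.1)

# Editing memory: forget the cross class entirely.
buffer.remove("cross")
remaining = buffer.labels()
```

In this sketch, everything class-specific lives in the buffer, so adding or forgetting a class never touches the normalizer. Swapping the identity placeholder for a learned network would leave the lookup unchanged.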

This paper is available on arXiv under a CC 4.0 license.