Authors:

(1) An Yan, UC San Diego, [email protected];

(2) Zhengyuan Yang, Microsoft Corporation, [email protected] with equal contributions;

(3) Wanrong Zhu, UC Santa Barbara, [email protected];

(4) Kevin Lin, Microsoft Corporation, [email protected];

(5) Linjie Li, Microsoft Corporation, [email protected];

(6) Jianfeng Wang, Microsoft Corporation, [email protected];

(7) Jianwei Yang, Microsoft Corporation, [email protected];

(8) Yiwu Zhong, University of Wisconsin-Madison, [email protected];

(9) Julian McAuley, UC San Diego, [email protected];

(10) Jianfeng Gao, Microsoft Corporation, [email protected];

(11) Zicheng Liu, Microsoft Corporation, [email protected];

(12) Lijuan Wang, Microsoft Corporation, [email protected]**.**

Editor’s note: This is the part 4 of 13 of a paper evaluating the use of a generative AI to navigate smartphones. You can read the rest of the paper via the table of links below.

Table of Links

- Abstract and 1 Introduction

- 2 Related Work

- 3 MM-Navigator

- 3.1 Problem Formulation and 3.2 Screen Grounding and Navigation via Set of Mark

- 3.3 History Generation via Multimodal Self Summarization

- 4 iOS Screen Navigation Experiment

- 4.1 Experimental Setup

- 4.2 Intended Action Description

- 4.3 Localized Action Execution and 4.4 The Current State with GPT-4V

- 5 Android Screen Navigation Experiment

- 5.1 Experimental Setup

- 5.2 Performance Comparison

- 5.3 Ablation Studies

- 5.4 Error Analysis

- 6 Discussion

- 7 Conclusion and References

3.3 History Generation via Multimodal Self Summarization

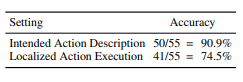

Set-of-Mark prompting bridges the gap between text outputs from GPT-4V and executable localized actions. However, the agent’s ability to maintain a Table 1: Zero-shot GPT-4V iOS screen navigation accuracy on the “intended action description” and “localized action execution” tasks, respectively.

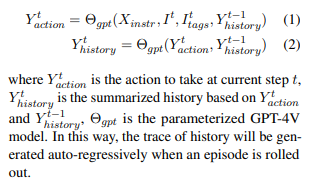

historical context is equally important in successfully completing tasks on smartphones. The key difficulty lies in devising a strategy that allows the agent to effectively determine the subsequent action at each stage of an episode, taking into account both its prior interactions with the environment and the present state of the screen. The naive approach of feeding all historical screens or actions into the agent is computationally expensive and may decrease the performance due to information overload. For example, screens at each step can change rapidly, and most of the historical screen information is not useful for reasoning about future actions. Humans, on the other hand, can keep track of a short memory of the key information after performing a sequence of actions. We aim to find a more concise representation than a sequence of screens or actions. Specifically, at each time step, we ask GPT-4V to perform multimodal self summarization, which converts the historical actions and current step information into a concise history in the form of natural language, which is formulated as follows:

This paper is available on arxiv under CC BY 4.0 DEED license.