Authors:

(1) An Yan, UC San Diego;

(2) Zhengyuan Yang, Microsoft Corporation (equal contribution);

(3) Wanrong Zhu, UC Santa Barbara;

(4) Kevin Lin, Microsoft Corporation;

(5) Linjie Li, Microsoft Corporation;

(6) Jianfeng Wang, Microsoft Corporation;

(7) Jianwei Yang, Microsoft Corporation;

(8) Yiwu Zhong, University of Wisconsin-Madison;

(9) Julian McAuley, UC San Diego;

(10) Jianfeng Gao, Microsoft Corporation;

(11) Zicheng Liu, Microsoft Corporation;

(12) Lijuan Wang, Microsoft Corporation.

Editor’s note: This is part 6 of 13 of a paper evaluating the use of generative AI to navigate smartphones. You can read the rest of the paper via the table of links below.

Table of Links

- Abstract and 1 Introduction

- 2 Related Work

- 3 MM-Navigator

- 3.1 Problem Formulation and 3.2 Screen Grounding and Navigation via Set of Mark

- 3.3 History Generation via Multimodal Self Summarization

- 4 iOS Screen Navigation Experiment

- 4.1 Experimental Setup

- 4.2 Intended Action Description

- 4.3 Localized Action Execution and 4.4 The Current State with GPT-4V

- 5 Android Screen Navigation Experiment

- 5.1 Experimental Setup

- 5.2 Performance Comparison

- 5.3 Ablation Studies

- 5.4 Error Analysis

- 6 Discussion

- 7 Conclusion and References

4.2 Intended Action Description

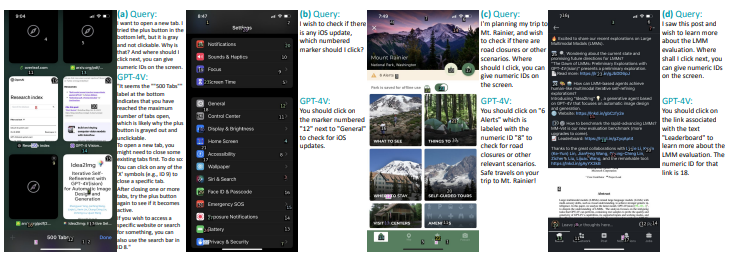

Table 1 reports an accuracy of 90.9% in generating the correct intended action description, quantitatively supporting GPT-4V’s ability to understand which screen actions to perform (Yang et al., 2023c; Lin et al., 2023). Figure 1 showcases representative screen understanding examples. Given a screen and a text instruction, GPT-4V produces a text description of its intended next move. For example, in Figure 1(a), GPT-4V recognizes Safari’s “limit of 500 open tabs” and suggests, “Try closing a few tabs and then see if the "+" button becomes clickable.” In (b), GPT-4V explains the procedure for an iOS update: “You should click on "General" and then look for an option labeled "Software Update".” GPT-4V also effectively understands complex screens containing multiple images and icons. For example, in (c), GPT-4V states, “For information on road closures and other alerts at Mt. Rainier, you should click on "6 Alerts" at the top of the screen.” Figure 1(d) gives an online shopping example, where GPT-4V suggests the correct product to check based on the user’s request for “wet cat food.”
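To make the metric concrete, the sketch below shows one way an accuracy like the one in Table 1 could be computed: each evaluation item pairs a screen and a text instruction with the model’s described next move and a correctness label, and accuracy is the fraction of items labeled correct. The `IntendedActionSample` schema, its field names, the boolean `judged_correct` label, and the toy items in the usage note are all hypothetical assumptions for illustration, not the paper’s data format, evaluation code, or data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class IntendedActionSample:
    """One evaluation item (hypothetical schema, not the paper's format)."""
    screenshot_path: str    # screen image given to the model
    instruction: str        # user's text instruction
    model_description: str  # model's free-text description of its intended next move
    judged_correct: bool    # assumed correctness label assigned by a reviewer


def intended_action_accuracy(samples: List[IntendedActionSample]) -> float:
    """Return the fraction of samples whose description was judged correct."""
    if not samples:
        raise ValueError("need at least one sample to compute accuracy")
    # True counts as 1 and False as 0 when summed.
    return sum(s.judged_correct for s in samples) / len(samples)
```

For example, with two toy items where only one description is judged correct, `intended_action_accuracy` returns `0.5`. Keeping the correctness label separate from the model output means the same records can be re-scored if the labeling criteria change.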

This paper is available on arxiv under CC BY 4.0 DEED license.