Authors:

(1) Sebastian Dziadzio, University of Tübingen;

(2) Çagatay Yıldız, University of Tübingen;

(3) Gido M. van de Ven, KU Leuven;

(4) Tomasz Trzcinski, IDEAS NCBR, Warsaw University of Technology, Tooploox;

(5) Tinne Tuytelaars, KU Leuven;

(6) Matthias Bethge, University of Tübingen.

Table of Links

2. Two problems with the current approach to class-incremental continual learning

3. Methods and 3.1. Infinite dSprites

4.1. Continual learning and 4.2. Benchmarking continual learning

5.1. Regularization methods and 5.2. Replay-based methods

5.4. One-shot generalization and 5.5. Open-set classification

Conclusion, Acknowledgments and References

2. Two problems with the current approach to class-incremental continual learning

We begin by addressing two aspects of current continual learning research that motivate our contributions.

Benchmarking. Continual learning datasets are typically limited to just a few tasks and, at most, a few hundred classes. In contrast, humans can learn and recognise thousands of novel objects throughout their lifetime. We argue that the continual learning community should focus more on scaling the number of tasks in its benchmarks. We show that standard methods inevitably fail when tested over hundreds of tasks: the effect of regularization decays over time, adding more parameters quickly becomes impractical, and storing and replaying old examples causes a rapid increase in both memory and compute. Moreover, to tackle individual sub-problems in continual learning, such as the influence of task similarity on forgetting, the role of disentangled representations, and the influence of hard task boundaries, we need to be able to flexibly create datasets that let us isolate these problems. We should also step away from static training and testing stages and embrace streaming settings where the model can be evaluated at any point. Finally, to advance beyond classification tasks, we need richer ground truth data than just class labels.

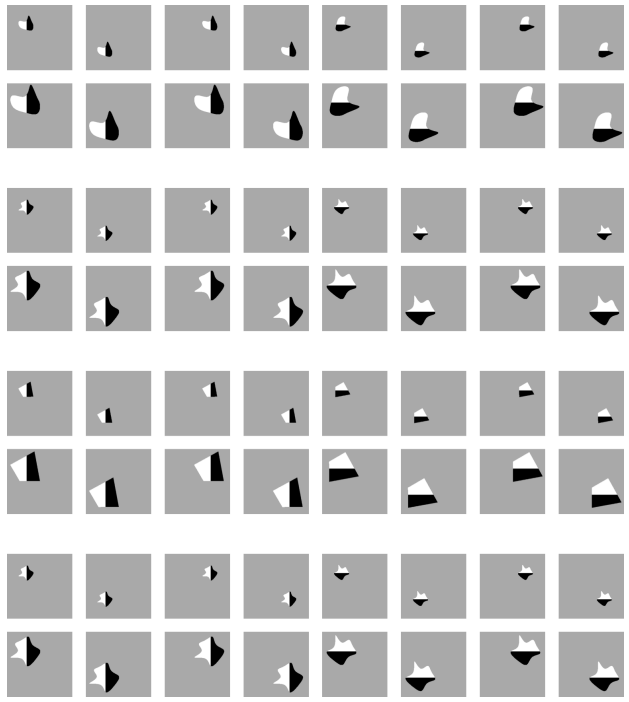

These observations motivated us to create a novel evaluation protocol. Taking inspiration from object-centric disentanglement libraries [8, 19], we introduce the infinite dSprites (idSprites) framework that allows for procedurally generating virtually infinite streams of data while retaining full control over the number of tasks, their difficulty and respective similarity, and the nature of boundaries between them. We hope that it will be a useful resource to the community and unlock new research directions in continual learning.
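As a rough illustration of what procedural generation with this kind of control might look like, the sketch below yields an endless stream of samples in which every task introduces new random polygons and every sample carries its ground-truth factors of variation. It is not the idSprites implementation: the names `Sample`, `random_shape`, `transform`, and `task_stream`, the polygon representation, and all parameter ranges are assumptions made for illustration only.

```python
import itertools
import math
import random
from dataclasses import dataclass


@dataclass
class Sample:
    vertices: list   # transformed polygon vertices, [(x, y), ...]
    shape_id: int    # class label: index of the canonical shape
    task_id: int     # task that introduced the shape
    scale: float     # ground-truth factors of variation
    angle: float
    shift: tuple


def random_shape(rng, n_vertices=6):
    """Hypothetical canonical shape: a random polygon, vertices ordered by angle."""
    angles = sorted(rng.uniform(0, 2 * math.pi) for _ in range(n_vertices))
    shape = []
    for a in angles:
        r = rng.uniform(0.5, 1.0)
        shape.append((r * math.cos(a), r * math.sin(a)))
    return shape


def transform(shape, scale, angle, shift):
    """Scale, rotate, and translate every vertex of the shape."""
    c, s = math.cos(angle), math.sin(angle)
    return [(scale * (c * x - s * y) + shift[0],
             scale * (s * x + c * y) + shift[1]) for x, y in shape]


def task_stream(shapes_per_task=2, samples_per_shape=3, seed=0):
    """Yield samples task by task, without end; each task adds new shapes."""
    rng = random.Random(seed)
    shape_id = 0
    for task_id in itertools.count():
        for _ in range(shapes_per_task):
            shape = random_shape(rng)
            for _ in range(samples_per_shape):
                scale = rng.uniform(0.5, 1.5)
                angle = rng.uniform(0, 2 * math.pi)
                shift = (rng.uniform(-1, 1), rng.uniform(-1, 1))
                yield Sample(transform(shape, scale, angle, shift),
                             shape_id, task_id, scale, angle, shift)
            shape_id += 1


# Usage: the stream never ends, so take a finite slice of it.
first_two_tasks = list(itertools.islice(task_stream(), 12))
```

Because the generator is unbounded, the number of tasks is limited only by how long the stream is consumed, and arguments such as `shapes_per_task` stand in for the kind of control over task structure described above.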

Invariant representations. Continual learning methods are usually benchmarked on class-incremental setups, where a classification problem is split into several tasks to be solved sequentially [37]. Note that the classification learning objective is invariant to identity-preserving transformations of the object of interest, such as rotation, change of lighting, or perspective projection. Unsurprisingly, the most successful discriminative learning architectures, from AlexNet [16] to ResNet [9], learn only features that are relevant to the classification task [11, 35] and discard valuable information about universal transformations, symmetries, and compositionality. In doing so, they entangle the particular class information with the knowledge about generalization mechanisms and represent both in the weights of the model. When a new task arrives, there is no clear way to update the two separately.

In this paper, we reframe the problem and recognize that the information about identity-preserving transformations, typically discarded, is important for transfer across tasks. For instance, a change in illumination affects objects of various classes similarly. Understanding this mechanism would lead to better generalization on future classes. Consequently, we suggest that modeling these transformations is key to achieving positive forward and backward transfer in continual classification. Symmetry transformations, or equivariances, offer a structured framework for this kind of modeling, which we elaborate on in the subsequent section.
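The illumination example can be made concrete with a toy sketch. Here we assume, purely for illustration, that a lighting change multiplies every pixel by a single gain; the functions `relight` and `estimate_gain` and the pixel values are hypothetical and not part of our method. The point is only that a transformation estimated on previously seen classes applies unchanged to a class that arrives later.

```python
def relight(image, gain):
    """Toy illumination model (an assumption): scale every pixel by one gain."""
    return [gain * p for p in image]


def estimate_gain(originals, relit):
    """Least-squares estimate of the shared gain from paired images."""
    num = sum(p * q for a, b in zip(originals, relit) for p, q in zip(a, b))
    den = sum(p * p for a in originals for p in a)
    return num / den


# Two classes seen so far and one class that arrives in a later task.
seen = [[0.1, 0.8, 0.3, 0.5], [0.9, 0.2, 0.4, 0.7]]
unseen = [0.6, 0.6, 0.1, 0.9]

# The same lighting change is applied to all classes.
true_gain = 0.5
gain = estimate_gain(seen, [relight(img, true_gain) for img in seen])

# The gain learned on seen classes undoes the lighting change on the new class.
recovered = relight(relight(unseen, true_gain), 1.0 / gain)
```

In this sketch the knowledge about the transformation is stored separately from any class-specific information, so it can be reused for new classes without being relearned.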

This paper is available on arXiv under a CC 4.0 license.