Table of Links

2 COCOGEN: Representing Commonsense structures with code and 2.1 Converting (T,G) into Python code

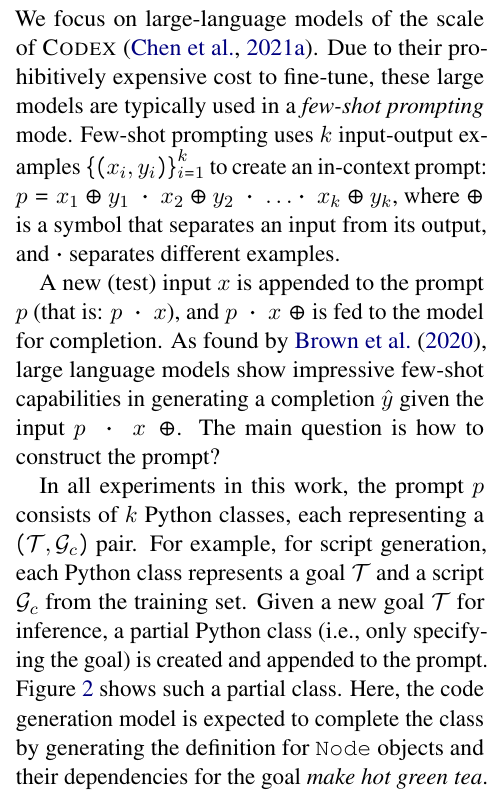

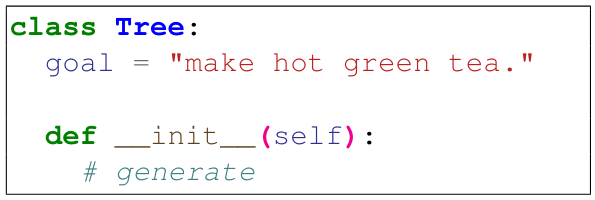

2.2 Few-shot prompting for generating G

3 Evaluation and 3.1 Experimental setup

3.2 Script generation: PROSCRIPT

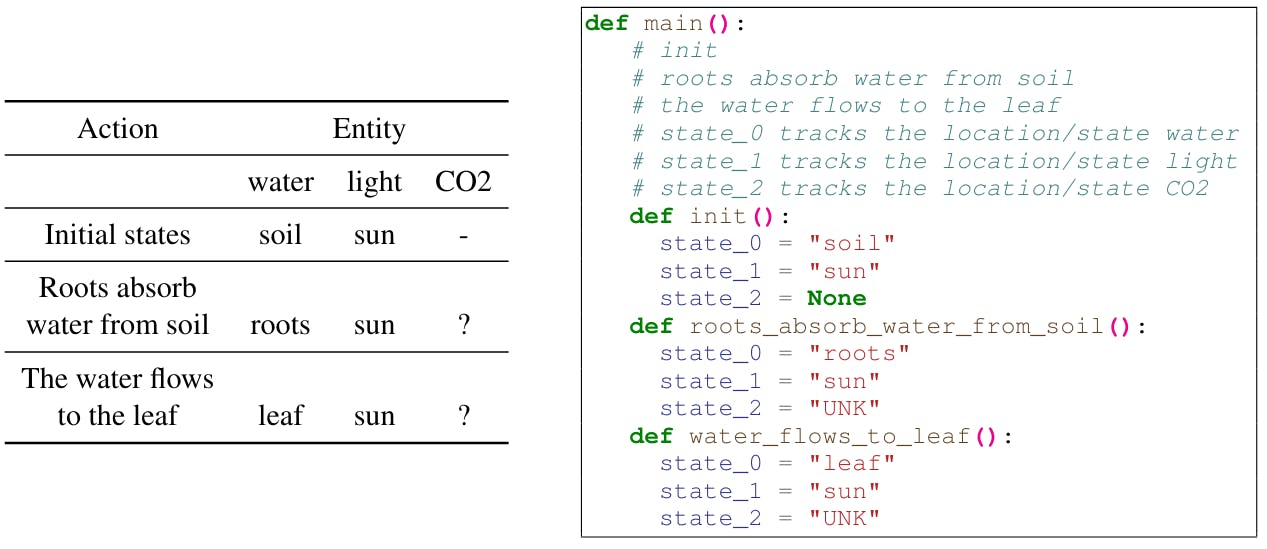

3.3 Entity state tracking: PROPARA

3.4 Argument graph generation: EXPLAGRAPHS

6 Conclusion, Acknowledgments, Limitations, and References

A Few-shot models size estimates

G Designing Python class for a structured task

2 COCOGEN: Representing Commonsense structures with code

We focus on tasks of structured commonsense generation. Each training example for such tasks has the form (T, G), where T is a text input and G is the structure to be generated (typically a graph). The key idea of COCOGEN is to transform an output graph G into a semantically equivalent program Gc written in a general-purpose programming language. In this work, we chose Python because of its popularity in the training data of modern Code-LLMs (Xu et al., 2022), but our approach is agnostic to the programming language. The code-transformed graphs are similar in format to the pre-training data of Code-LLMs, and thus serve as training or few-shot examples that are easier to generalize from than the original raw graph. COCOGEN uses Code-LLMs to generate Gc given T, which we eventually convert back into the graph G.

We use the task of script generation (PROSCRIPT, Figure 1) as a running example to motivate our method: script generation aims to create a script (G) to achieve a given high-level goal (T).

2.1 Converting (T, G) into Python code

We convert a (T, G) pair into a Python class or function. The general procedure involves adding the input text T at the beginning of the code as a class attribute or descriptive comment, and encoding the structure G using standard constructs for representing structure in code (e.g., hashmaps, object attributes) or function calls. The goal is to compose Python code that represents a (T, G) pair while retaining the syntax and conventions of typical Python code.

For example, for the script generation task, we convert the (T, G) pair into a Tree class (Figure 1b). The goal T is added as a class attribute (goal), and the script G is added by listing the nodes and edges separately. We first instantiate the nodes as objects of class Node. Then, the edges are added as a children attribute on each node (Figure 1b). For example, we instantiate the node “Take out several plates” as take_out_several_plates = Node(), and add it as a child of the node take_pies_out_to_cool.
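The Tree encoding described above can be sketched as follows. This is a minimal illustration rather than the exact code of Figure 1b: the body of the Node class, the goal string, and the `self.nodes` dictionary are assumptions added so that the snippet runs on its own. Only the goal attribute, the Node() instantiations, and the children assignments come from the description.

```python
class Node:
    """Hypothetical minimal node; the text only shows Node() being instantiated."""

    def __init__(self):
        self.children = []


class Tree:
    # The input text T is stored as a class attribute.
    # The goal string here is an illustrative placeholder.
    goal = "serve the potpies on a plate"

    def __init__(self):
        # nodes: one Node object per step of the script G
        take_pies_out_to_cool = Node()
        take_out_several_plates = Node()

        # edges: each node lists the steps that follow it
        take_pies_out_to_cool.children = [take_out_several_plates]

        # Assumption: keep references so the graph can be inspected later.
        self.nodes = {
            "take_pies_out_to_cool": take_pies_out_to_cool,
            "take_out_several_plates": take_out_several_plates,
        }
```

Listing nodes first and edges second keeps every variable defined before it is referenced, which is what lets the class read like ordinary Python.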

While there are multiple ways of representing a training example as a Python class, we found empirically that this relatively simple format is the most effective, especially with larger models. We analyze the choice of format and its connection with the model size in Section 4.

2.2 Few-shot prompting for generating G

In our experiments, we used CODEX (Chen et al., 2021a) and found that it nearly always generates syntactically valid Python. Thus, the generated code can be easily converted back into a graph and evaluated using the dataset’s standard, original metrics. Appendix F lists sample prompts for each of the tasks we experimented with.
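One way to convert generated code back into a graph is to read it with Python's standard `ast` module instead of executing it. The sketch below assumes the Tree format of Section 2.1. The function name `code_to_graph`, the `GENERATED` sample, and the (goal, nodes, edges) return shape are hypothetical and are not taken from the paper's implementation.

```python
import ast

# Hypothetical model output in the Tree format of Section 2.1.
GENERATED = '''
class Tree:
    goal = "serve the potpies on a plate"

    def __init__(self):
        # nodes
        take_pies_out_to_cool = Node()
        take_out_several_plates = Node()
        # edges
        take_pies_out_to_cool.children = [take_out_several_plates]
'''


def code_to_graph(source):
    """Return (goal, nodes, edges), or None if the source is not valid Python."""
    try:
        module = ast.parse(source)
    except SyntaxError:
        return None

    goal, nodes, edges = None, [], []
    for stmt in ast.walk(module):
        if not isinstance(stmt, ast.Assign) or len(stmt.targets) != 1:
            continue
        target, value = stmt.targets[0], stmt.value
        if isinstance(target, ast.Name):
            if target.id == "goal" and isinstance(value, ast.Constant):
                goal = value.value  # the input text T
            elif (isinstance(value, ast.Call)
                  and isinstance(value.func, ast.Name)
                  and value.func.id == "Node"):
                nodes.append(target.id)  # e.g. take_out_several_plates = Node()
        elif (isinstance(target, ast.Attribute)
              and target.attr == "children"
              and isinstance(target.value, ast.Name)
              and isinstance(value, ast.List)):
            # parent.children = [child, ...] yields one edge per child
            for child in value.elts:
                if isinstance(child, ast.Name):
                    edges.append((target.value.id, child.id))
    return goal, nodes, edges
```

Parsing rather than executing the output means a syntactically invalid generation is detected and skipped, and no model-written code is ever run.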

This paper is available on arXiv under the CC BY 4.0 DEED license.

Authors:

(1) Aman Madaan, Language Technologies Institute, Carnegie Mellon University, USA;

(2) Shuyan Zhou, Language Technologies Institute, Carnegie Mellon University, USA;

(3) Uri Alon, Language Technologies Institute, Carnegie Mellon University, USA;

(4) Yiming Yang, Language Technologies Institute, Carnegie Mellon University, USA;

(5) Graham Neubig, Language Technologies Institute, Carnegie Mellon University, USA.